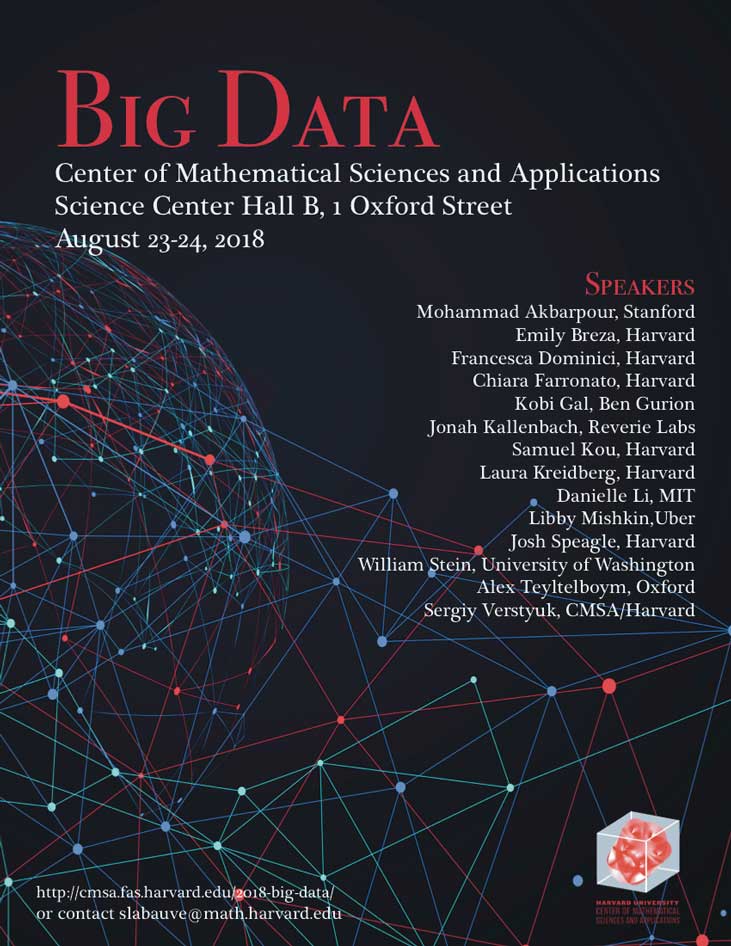

On August 23-24, 2018, the CMSA will host our fourth annual Conference on Big Data. The conference will feature many speakers from the Harvard community as well as scholars from across the globe, with talks focusing on computer science, statistics, math and physics, and economics.

The talks will take place in Science Center Hall B, 1 Oxford Street.

For a list of lodging options convenient to the Center, please visit our recommended lodgings page.

Please note that lunch will not be provided during the conference, but a map of Harvard Square with a list of local restaurants can be found by clicking Map & Restaurants.

Confirmed Speakers:

- Mohammad Akbarpour, Stanford

- Emily Breza, Harvard

- Francesca Dominici, Harvard

- Chiara Farronato, Harvard

- Kobi Gal, Ben Gurion

- Jonah Kallenbach, Reverie Labs

- Samuel Kou, Harvard

- Laura Kreidberg, Harvard

- Danielle Li, MIT

- Libby Mishkin, Uber

- Josh Speagle, Harvard

- William Stein, University of Washington

- Alex Teytelboym, University of Oxford

- Sergiy Verstyuk, CMSA/Harvard

Organizers:

- Shing-Tung Yau, William Caspar Graustein Professor of Mathematics, Harvard University

- Scott Duke Kominers, MBA Class of 1960 Associate Professor, Harvard Business School

- Richard Freeman, Herbert Ascherman Professor of Economics, Harvard University

- Jun Liu, Professor of Statistics, Harvard University

- Horng-Tzer Yau, Professor of Mathematics, Harvard University