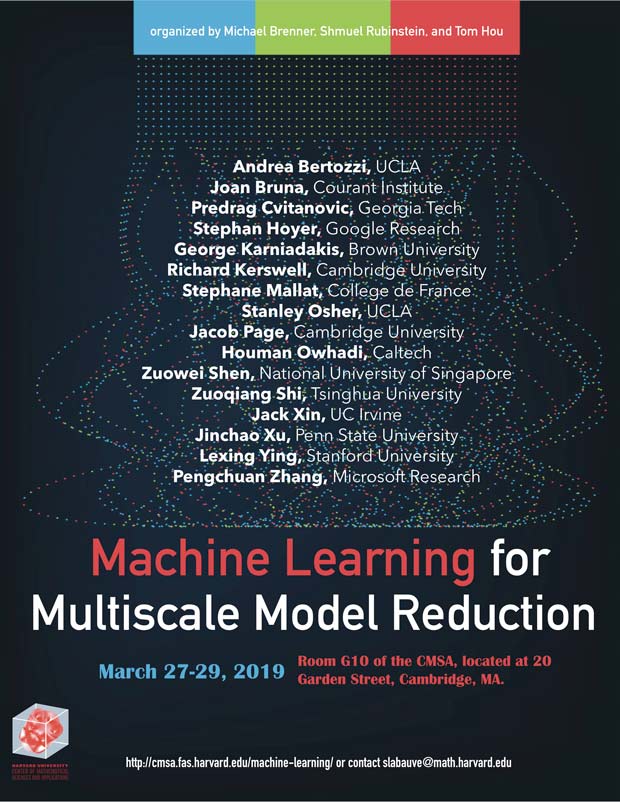

Machine Learning for Multiscale Model Reduction Workshop

March 27, 2019 @ 9:00 am - March 29, 2019 @ 11:55 am

The Machine Learning for Multiscale Model Reduction Workshop will take place on March 27-29, 2019. This is the second of two workshops organized by Michael Brenner, Shmuel Rubinstein, and Tom Hou. The first, Fluid turbulence and Singularities of the Euler/Navier-Stokes equations, will take place on March 13-15, 2019. Both workshops will be held in room G10 of the CMSA, located at 20 Garden Street, Cambridge, MA.

For a list of lodging options convenient to the Center, please visit our recommended lodgings page.

Speakers:

- Joan Bruna, Courant Institute

- Predrag Cvitanovic, Georgia Tech

- Stephan Hoyer, Google Research

- De Huang, Caltech

- George Karniadakis, Brown University

- Richard Kerswell, Cambridge University

- Stephane Mallat, ENS

- Stanley Osher, UCLA

- Jacob Page, Cambridge University

- Houman Owhadi, Caltech

- Zuowei Shen, National University of Singapore

- Jack Xin, UC Irvine

- Jinchao Xu, Penn State University

- Lexing Ying, Stanford University and Facebook AI Research

- Pengchuan Zhang, Microsoft Research