Program on Mathematical Aspects of Scattering Amplitudes

Mathematical Aspects of Scattering Amplitudes Program To receive email updates and program announcements, visit this...

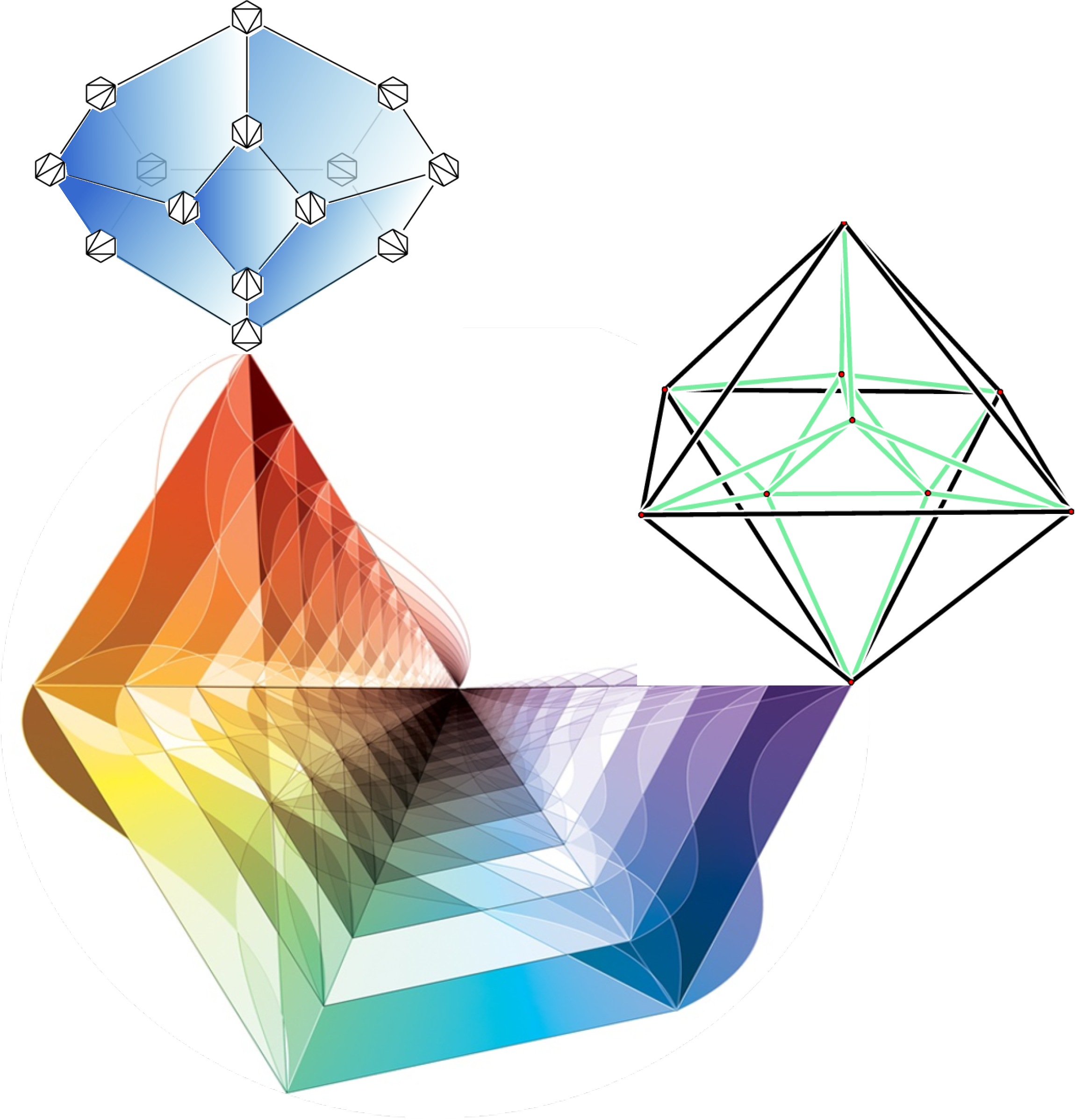

Amplituhedra, Cluster Algebras, and Positive Geometry Dates: May 29-31, 2024 Location: Harvard CMSA, 20 Garden...

Mathematics and Machine Learning Program Dates: September 3 – November 1, 2024 Location: Harvard CMSA,...

General Relativity Seminar Speaker: Zihan Yan, Cambridge University Title: Linearised Second Law for Higher Curvature Gravity and Non-Minimally Coupled Vector Fields Abstract: Expanding the work of arXiv:1504.08040, we show that black holes […]

CMSA Q&A Seminar Speaker: Yannai Gonczarowski, Harvard University Question: What do people mean when they say 'the intersection between theoretical computer science and economic theory'?

Algebraic Geometry in String Theory Seminar Speaker: Jonathan Rosenberg, University of Maryland Title: Derived categories of genus one curves and torsors over abelian varieties Abstract: Studying orientifold string theories on elliptic curves […]

Active Matter Seminar Speaker: Abby Plummer, Boston University Title: Shape morphing with swelling hydrogels and expanding foams Abstract: Materials that increase in size offer intriguing possibilities for shape-morphing applications. Here, […]

Current Developments in Mathematics 2024. April 5-6, 2024, Harvard University Science Center, Lecture Hall C. Register here: https://www.math.harvard.edu/event/current-developments-in-mathematics-2024/ Speakers: Daniel Cristofaro-Gardiner - University of Maryland; Samit Dasgupta - Duke […]

Quantum Matter in Mathematics and Physics Seminar Speaker: Henry Cohn (MIT and Microsoft) Title: Discrete geometry and the modular bootstrap Abstract: In this talk, I'll discuss the remarkable connections between […]

CMSA Member Seminar Speaker: Farzan Vafa Title: Phase diagram and confining strings in a minimal model of nematopolar matter Abstract: We investigate a minimal model of a nematopolar system. We […]

Algebraic Geometry in String Theory Seminar Speaker: Alan Thompson (Loughborough University) Title: Mirror symmetry for fibrations and degenerations of K3 surfaces Abstract: In 2016, Doran, Harder, and I conjectured a mirror […]

CMSA Member Seminar Speaker: Puskar Mondal Title: Global weak solutions of 3+1 dimensional vacuum Einstein equations Abstract: It is important to understand if the 'solutions' of non-linear evolutionary PDEs persist […]

Mathematical Aspects of Scattering Amplitudes Program To receive email updates and program announcements, visit this link to sign up for the CMSA Mathematical Aspects of Scattering Amplitudes Program mailing list. […]

General Relativity Seminar Speaker: Xiaoyi Liu, UCSB Title: New Well-Posed Boundary Conditions for Semi-Classical Euclidean Gravity Abstract: We consider four-dimensional Euclidean gravity in a finite cavity. We point out that […]

CMSA Q and A Seminar Speaker: Cengiz Pehlevan, Harvard Question: What is feature learning?

Mathematical Aspects of Scattering Amplitudes Lecture Speaker: Sabrina Pasterski, Perimeter Institute Title: Radiation in Holography

Algebraic Geometry in String Theory Seminar Speaker: Aaron Landesman, MIT Title: Geometric local systems on very general curves Abstract: What is the smallest genus h of a non-isotrivial curve over the […]

Quantum Matter in Mathematics and Physics Seminar Speaker: Chetan Nayak, Microsoft and UCSB Title: Fusion Rule Measurement in a Topological Qubit

CMSA Member Seminar Speaker: Sunghyuk Park, Harvard CMSA Title: 3D quantum trace map Abstract: I will speak about my recent work (joint with Sam Panitch) constructing the 3d quantum trace map, […]

| Full Name | Role | Office # | Affiliation | Dates | Email Address |
| --- | --- | --- | --- | --- | --- |
| Lionel Mason | Scattering Amplitudes Program Participant | G05 | University of Oxford | April 14 - 25, 2024 | lmason@maths.ox.ac.uk |
| Jordan Cotler | Scattering Amplitudes Program […] | | | | |

General Relativity Seminar Speaker: Chris Fewster, York University Title: Quantum Energy Inequalities Abstract: Many theorems of mathematical relativity, including singularity and positive mass theorems, include the classical energy conditions among […]

CMSA Q and A Seminar Speaker: Melanie Weber, Harvard Question: What is the Ricci curvature of a graph?

Algebraic Geometry in String Theory Seminar Speaker: Alessandro Chiodo, IMJ-Paris Rive Gauche (Jussieu) Title: The logarithmic double ramification locus Abstract: Given a family of smooth curves C -> S with a line […]

Mathematical Aspects of Scattering Amplitudes Lecture Speaker: Tomasz Taylor, Northeastern University Title: Progress in Yang-Mills-Liouville Theory

Quantum Matter in Mathematics and Physics Seminar Speaker: Jesse Thaler, MIT Title: What Observables are Safe to Calculate? Abstract: In collider physics, perturbative quantum field theory is the workhorse framework […]

The CMSA will be hosting a Workshop on Global Categorical Symmetries from April 29–May 3, 2024. Participation in the workshop is by invitation. The workshop will hold three Symmetry […]

Colloquium Speaker: Lance Dixon (SLAC, Stanford University) Title: The DNA of Particle Scattering Abstract: At the Large Hadron Collider, the copious scattering of quarks and gluons in quantum chromodynamics (QCD) produces Higgs […]

Speaker: Lakshminarayanan Mahadevan Question: What is morphogenesis? (Morphogenesis: geometry and biology)

Mathematical Aspects of Scattering Amplitudes Lecture Speaker: Daniil Rudenko, U Chicago Title: Introduction to Cluster Polylogarithms

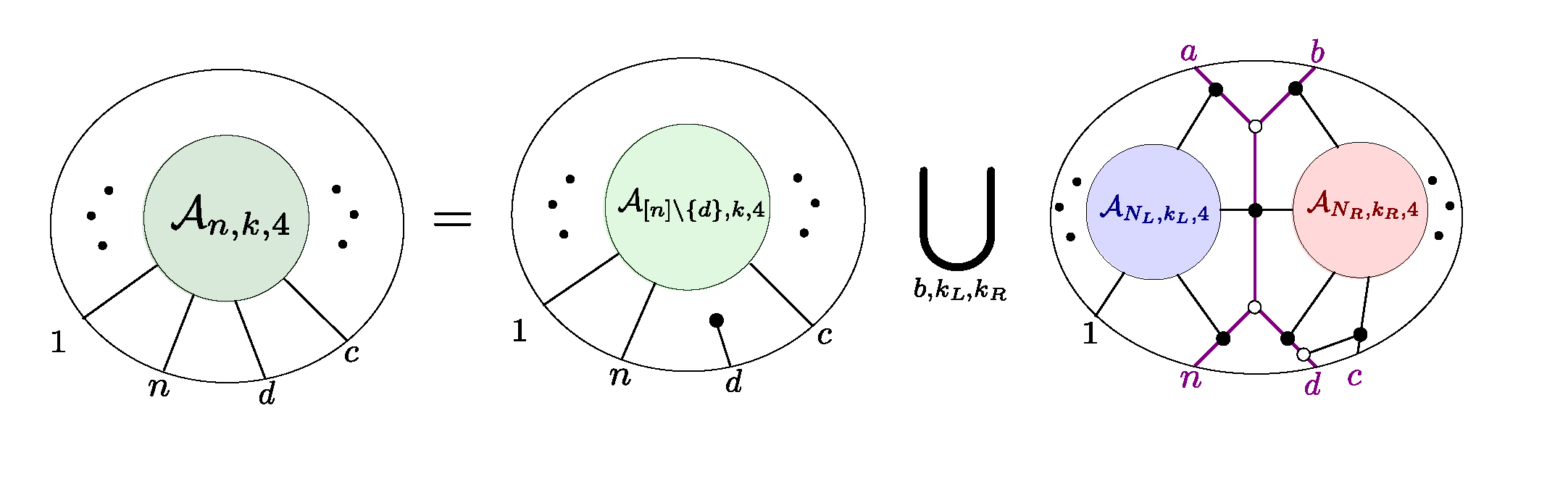

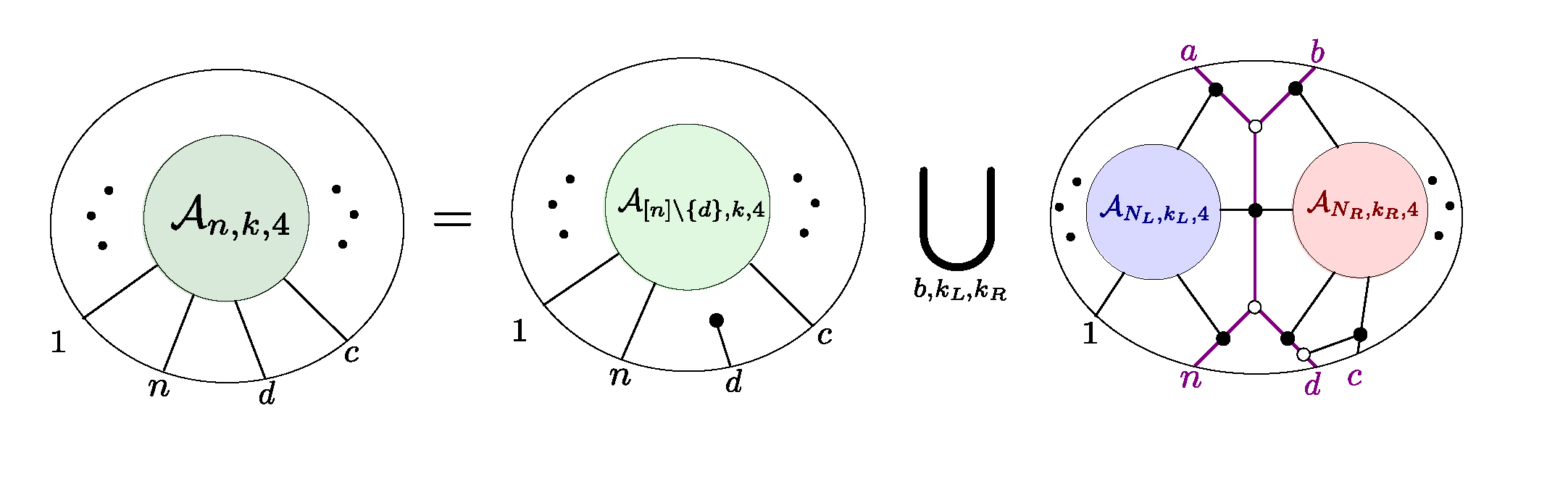

Mathematical Aspects of Scattering Amplitudes Lecture Speaker: Jaroslav Trnka, University of California, Davis Title: Loops of loops expansion in the Amplituhedron